Like it or not, for most websites Google is the biggest source of traffic. So it's paramount that Google crawls, indexes and ranks your website. Nothing is more frustrating for a new website owner than waiting, wishing and praying for the site to get indexed.

I know!

Because I've been through it recently.

Errors in Google Search Console

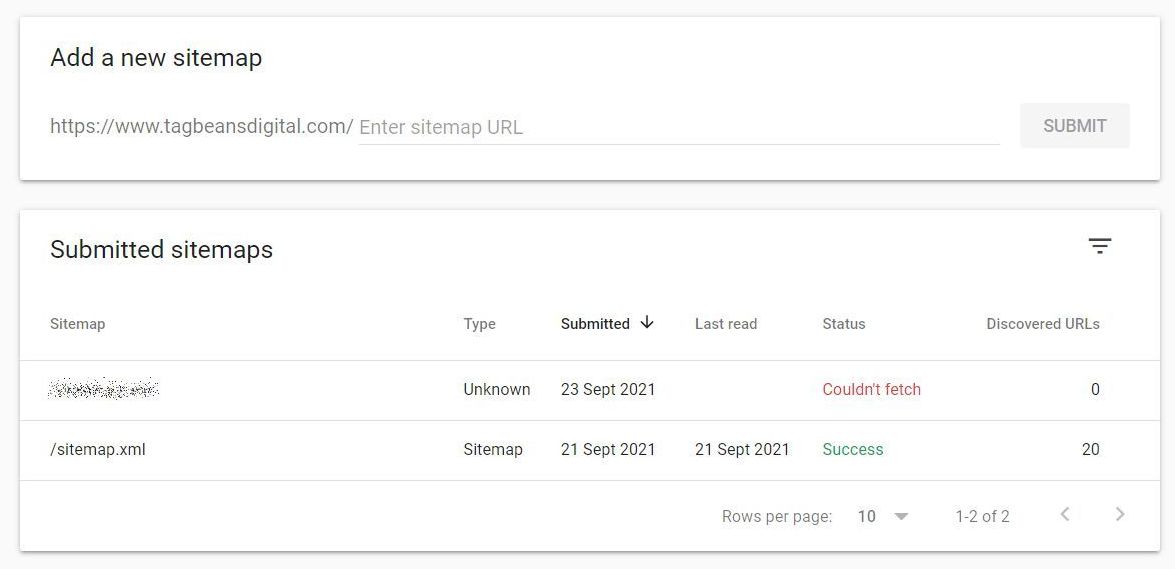

I had created my website and hosted it on GoDaddy. I added the Google Analytics code and started collecting data in GA. Then I created a new property in Google Search Console and submitted the XML sitemap.

That's when the problems started.

Search Console could not fetch my sitemap

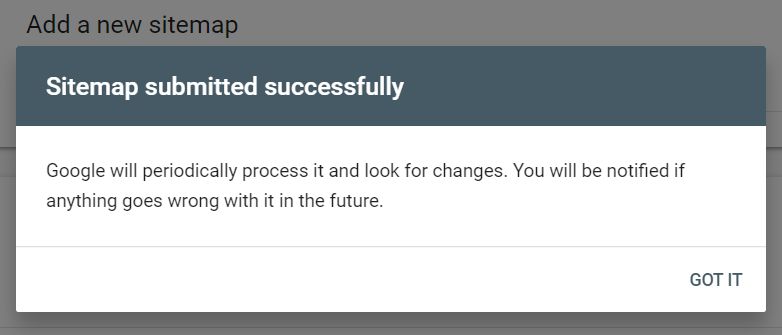

As soon as I added the sitemap, Search Console showed a message saying that it had been submitted successfully.

But the status for sitemap.xml read 'Couldn't fetch'.
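A 'Couldn't fetch' status does not necessarily mean the file is broken, but it is worth ruling out a malformed sitemap. One minimal local check, assuming a standard sitemaps.org `<urlset>` sitemap, is to parse the file with Python's standard library and list its `<loc>` URLs. The function name `sitemap_urls` is hypothetical, and a sitemap index file (root element `<sitemapindex>`) would be rejected by this sketch.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol for <urlset> sitemaps.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_urls(xml_data) -> list[str]:
    """Return the <loc> URLs in a <urlset> sitemap given as str or bytes.

    Raises ValueError if the XML is malformed, the root element is not a
    sitemaps.org <urlset>, or no <loc> entries are present.
    """
    try:
        root = ET.fromstring(xml_data)
    except ET.ParseError as exc:
        raise ValueError(f"not well-formed XML: {exc}") from exc
    # A missing or misspelled xmlns shows up here as a plain "urlset" tag.
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        raise ValueError(f"unexpected root element: {root.tag}")
    urls = [loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc") if loc.text]
    if not urls:
        raise ValueError("no <loc> entries found")
    return urls


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.example.com/</loc></url>
  <url><loc>http://www.example.com/about</loc></url>
</urlset>"""
print(sitemap_urls(sample))  # ['http://www.example.com/', 'http://www.example.com/about']
```

To check your real file, you could pass it the bytes read from disk, for example `sitemap_urls(open("sitemap.xml", "rb").read())`. If this raises an error, fix the file before resubmitting; if it passes, the problem is more likely on Google's side.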

URL Inspection tool

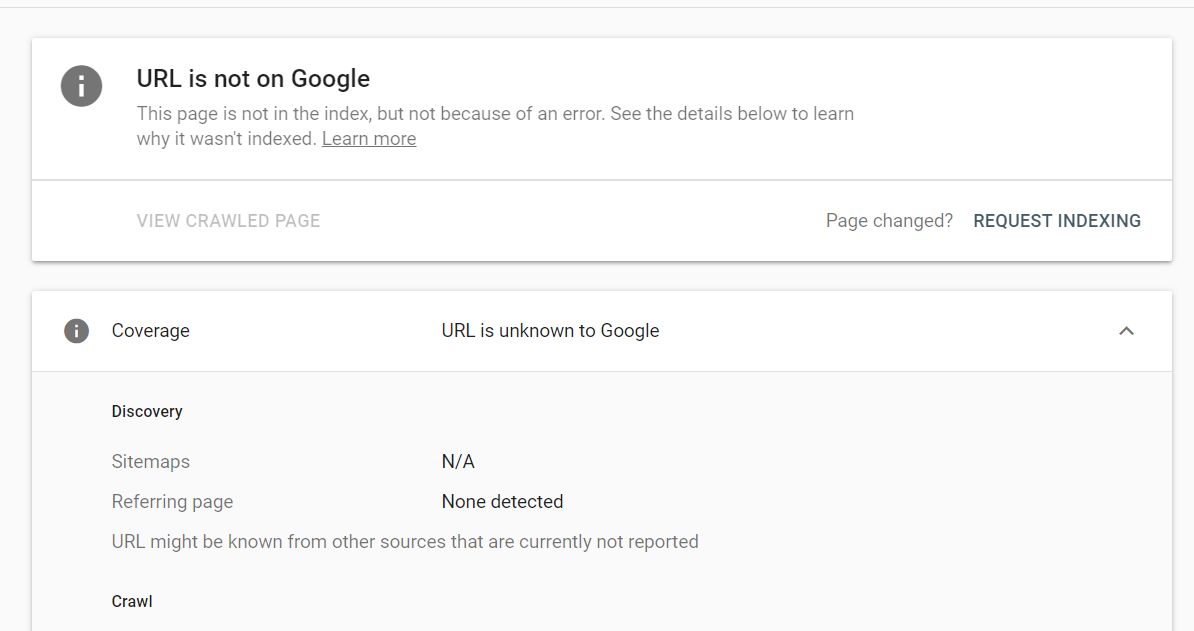

The URL Inspection tool said that my URL was not on Google.

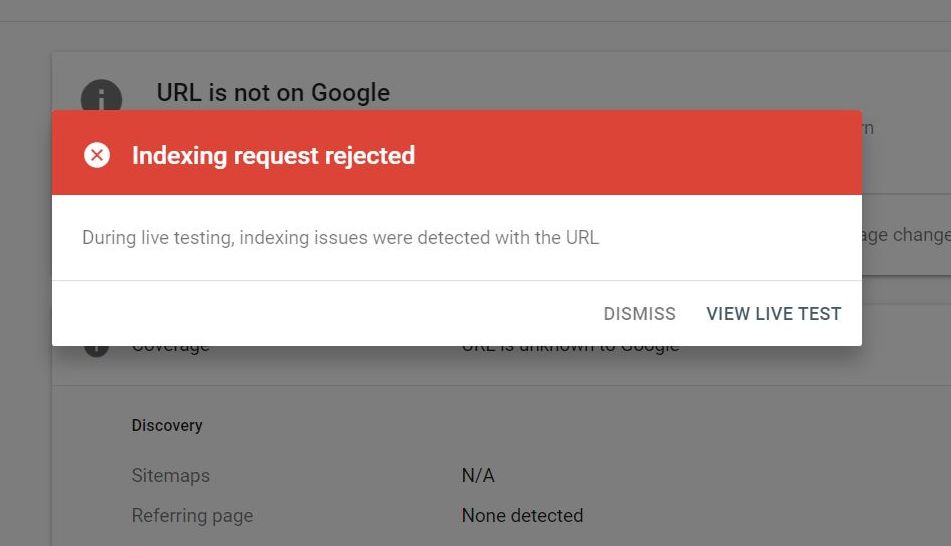

I clicked Request Indexing and got the error 'Indexing request is rejected'.

It was not clear whether this was an error on my end or just a delay in indexing.

After a lot of research, here are some of the things I did.

How to solve Google Search Console indexing errors

Wait patiently for 4-5 days

Sometimes Google simply needs a few days to crawl and index a new website, and for Search Console to accept your sitemap.

Check every day, but give it 4-5 days after submitting your sitemap. Admittedly, this is not what I did. I panicked, reached out to friends who work in SEO and digital marketing, trawled through hundreds of forum threads, and walked around in circles pulling my hair out.

Based on that experience, I can tell you there is no harm in waiting 5 days.

Create more properties in Search Console

There are 2 types of properties in Search Console: Domain properties and URL-prefix properties. A Domain property covers all subdomains as well as both http and https, so it is the better choice if you plan to add subdomains to your website later.

I had first created a Domain property, so I tried creating a URL-prefix property as well. In fact, I created 2 of them: one with www and one without.

Try submitting your sitemap and inspecting URLs in the other properties too. It did not work for me, but these are the things I tried while I waited.
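Unlike a Domain property, a URL-prefix property only covers URLs that begin with the exact prefix you enter, including the http:// or https:// part. As a rough illustration, this Python sketch lists the four prefixes a site without other subdomains might be reachable at, each of which would be a separate URL-prefix property. The helper name `url_prefix_variants` is hypothetical and example.com is a placeholder.

```python
def url_prefix_variants(domain: str) -> list[str]:
    """Return the https/http and non-www/www URL prefixes for a bare domain."""
    bare = domain.strip().lower()
    # Accept "www.example.com" as input too, and normalize it to "example.com".
    if bare.startswith("www."):
        bare = bare[len("www."):]
    return [
        f"{scheme}://{host}/"
        for scheme in ("https", "http")
        for host in (bare, f"www.{bare}")
    ]


for prefix in url_prefix_variants("example.com"):
    print(prefix)
# https://example.com/
# https://www.example.com/
# http://example.com/
# http://www.example.com/
```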

Check your robots.txt file

robots.txt is a text file that tells search engine crawlers which pages they may crawl and which pages they should not crawl. It is very easy to create one.

Open a new file in Notepad and paste the following into it:

------------------------------------

User-agent: *
Allow: /
Sitemap: http://www.example.com/sitemap.xml

-----------------------------------

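Save the file as robots.txt and upload it to the root folder of your website, so that it can be reached at http://www.example.com/robots.txt. These rules mean that you are OK with all search engine bots crawling your whole website, and the Sitemap line tells them where to find your sitemap. If you want to stop search engines from crawling a page or a folder on your website, add Disallow rules for it in the same group, below the User-agent line: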

------------------------------------

Disallow: /wp-admin/
Disallow: /templates.php

-----------------------------------
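To check how a crawler might read these rules before uploading them, Python's standard-library `urllib.robotparser` module can parse robots.txt text and answer whether a given URL may be fetched. This is a sketch under assumptions: example.com and the sample paths are placeholders, and the rules from both snippets above are combined into one group. Python's parser applies rules in file order (the first matching rule wins), whereas Google uses the most specific matching rule, so the Disallow lines are placed before `Allow: /` here to get the same answer from both.

```python
from urllib.robotparser import RobotFileParser

# Both snippets above, merged into a single group for the "*" user agent.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /templates.php
Allow: /
Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether Googlebot may crawl a few sample paths under these rules.
for path in ("/", "/blog/my-post", "/wp-admin/options.php", "/templates.php"):
    print(path, parser.can_fetch("Googlebot", "http://www.example.com" + path))
# / True
# /blog/my-post True
# /wp-admin/options.php False
# /templates.php False

print(parser.site_maps())  # ['http://www.example.com/sitemap.xml']
```

`site_maps()` requires Python 3.8 or later. For the authoritative answer on how Google reads your live file, Search Console's own robots.txt report remains the place to look.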

Try generating your sitemap with a different tool

There are many free generators online. www.xml-sitemaps.com can generate sitemap.xml files for you, while tools such as www.seoptimer.com/robots-txt-generator and en.ryte.com/free-tools/robots-txt-generator/ generate robots.txt files.

I had used xml-sitemaps.com. It is a simple, straightforward process that is unlikely to cause errors.

But if Search Console still has not accepted your sitemap after several days, it may help to generate your XML sitemap with a different tool.
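Online generators produce a file in the sitemaps.org format. If you would rather build a minimal one yourself, here is a Python sketch using only the standard library. The page list is a placeholder for your own URLs, the function name `build_sitemap` is hypothetical, and optional tags such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemaps.org <urlset> document with one <loc> per URL."""
    # Serialize the sitemap namespace as the default xmlns, without a prefix.
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url in urls:
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = url
    # tostring() escapes characters such as "&" in the URLs automatically.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = ["http://www.example.com/", "http://www.example.com/contact"]  # placeholders
print(build_sitemap(pages))
```

You would save the output as sitemap.xml, upload it to your root folder next to robots.txt, and resubmit it in Search Console.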

Try getting a link from a high-traffic website

This is easier said than done. Links from spam directories and sites of questionable repute are easy to get, but they are not the links you want. If you have access to a website that gets crawled and indexed frequently, add a link to your site there to help search engines discover it.

Check your website for obvious errors

While you are on your website, press F12 to open your browser's developer tools, or right-click on the page and select Inspect. The Console tab lists obvious errors on the page, such as scripts or images that fail to load, and these should be fixed so that search engines can crawl your site properly.

These steps should help get your website accepted by Search Console and indexed by Google. But to keep Googlebot interested in your website:

- Keep adding fresh content in the form of pages or blog posts

- Keep editing existing pages to update outdated content

- Keep working on your social media profiles to generate traffic

- Keep producing useful content that other websites would link to

- Keep your digital marketing skills up to date

So which of these strategies worked for me?

Well, I am not entirely sure whether waiting patiently would have been enough on its own. After trying these options and waiting 4 days, my sitemap.xml was accepted and my website was indexed.

This is my personal experience of getting my website indexed by Google. I hope these tips help you on your journey too.

For more information on digital marketing strategies, read our blockbuster Digital Marketing Powerbook.

If you would like help getting your website indexed and ranked, reach out to TagBeans Digital today.