ChatGPT is everywhere. From a friend who called it a ‘feku’ (bluff or bullshitter) to Google reportedly issuing an internal code-red over it, to people claiming that it will take over the world, ChatGPT is the talk of the town.

Haven’t heard about it? When did you wake up, Mr. Rip Van Winkle?

So what is ChatGPT and how does it work?

What is ChatGPT?

ChatGPT is a gleaming, brand-new chatbot launched by OpenAI in November 2022 that often seems all-knowing.

The full form is Chat Generative Pre-trained Transformer, which means that:

- It can chat with you.

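- It is generative and pre-trained: it generates new text, and it learned to do so from a huge body of text before you ever typed a question.

- And it’s a Transformer, a type of neural network that takes in a sequence of input data, weighs each part of it by relevance, and produces a meaningful output.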

In short, ChatGPT will attempt an answer to almost any question you throw at it, in just about any field.

OpenAI introduced the term GPT in 2018 for its language model, which uses deep learning to come up with human-like responses.

Precursors of ChatGPT

ChatGPT, released in November 2022, is built on GPT-3.5, a refinement of OpenAI’s third-generation GPT model. Its precursors include:

- GPT-3 released in June 2020

- GPT-2 released in February 2019

And why is OpenAI in such a breakneck hurry? Because the competition is hot on its heels with a million AI products that promise to do everything from writing a movie, to coding a full program, to launching a rocket in the coming few years.

How does ChatGPT work?

In simple terms, the program is loosely modelled on the human brain: it builds connections between words, sequences of words, and logic to interpret the input text. It was then trained and supervised by humans on a very large number of prompts and responses, with its answers refined over time until they came close to the target: a response that reads very much like a human’s.

There are certain terms you have to understand in this context:

Artificial neural networks

An idea that dates back to 1943, when Warren McCulloch and Walter Pitts proposed a mathematical model of a neuron. An artificial neural network is inspired by the neurons of the human brain and uses inter-connected nodes to process data in a non-linear fashion. The system learns from examples over time, which enables better and more human-like responses.
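To make "inter-connected nodes" and "non-linear" a little more concrete, here is a minimal sketch of a two-layer network in plain Python. The weights are hand-picked for illustration (an assumption of this example, not something from ChatGPT); a real network would learn them from data. The network computes XOR, a classic function that no single straight-line rule can capture.

```python
import math

def sigmoid(x):
    # Non-linear activation: squashes any number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    # Each node takes a weighted sum of every input, adds its bias,
    # and passes the result through the non-linear activation.
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical hand-picked weights (a trained network would learn these).
HIDDEN_WEIGHTS = [[20.0, 20.0], [-20.0, -20.0]]  # node 1 acts like OR, node 2 like NAND
HIDDEN_BIASES = [-10.0, 30.0]
OUTPUT_WEIGHTS = [[20.0, 20.0]]                  # output node acts like AND
OUTPUT_BIASES = [-30.0]

def xor_net(a, b):
    hidden = layer([a, b], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(xor_net(a, b)))
```

The output is close to 1 only when exactly one input is 1. Models like GPT stack many such layers with billions of learned weights, but the basic building block is the same.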

Transformer model

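Introduced in 2017 by researchers at Google, including the Google Brain team, the Transformer is a neural network architecture that uses a technique called ‘attention’, or ‘self-attention’, to work out how strongly each part of an input sequence relates to every other part. The original design pairs an encoder with a decoder to turn input sequences into meaningful, relevant, human-like output.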

For language tasks, the Transformer has largely superseded recurrent neural network (RNN) models such as long short-term memory (LSTM) networks. Those models process input one step at a time, which makes them slower to train and prone to losing track of context over long passages.
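The ‘attention’ idea can be sketched in a few lines of plain Python. This is a simplified illustration, not how ChatGPT is actually implemented: the word vectors below are made up, and the learned query, key, and value projections of a real Transformer are left out, so each word's vector plays all three roles.

```python
import math

def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    # Scaled dot-product self-attention with queries = keys = values
    # = the input vectors (no learned projections, for simplicity).
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all word vectors according to the attention weights.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "cat", "sat"]
# Made-up 4-dimensional word vectors, purely for illustration.
embeddings = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 2.0, 0.0, 1.0],
    [0.0, 1.0, 2.0, 0.0],
]

outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word "looks at" every word in the sentence, including itself. Because every word is compared with every other word in one go, nothing has to be passed along step by step as in an RNN.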

Language models

Earlier, machines only understood programming languages, which have a fixed set of words, instructions, or numbers. Language models instead use statistics to estimate how likely a given sequence of words is to occur. When producing output, the model draws on patterns learned from vast amounts of existing text to pick a likely next word (or word fragment), one step at a time.
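A toy version of this idea is a bigram model, which simply counts which word follows which. The sketch below uses a tiny made-up corpus; models like GPT use neural networks trained on far more text rather than raw counts, so treat this only as an illustration of "probability of a sequence of words".

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration.
CORPUS = (
    "the cat sat on the mat . "
    "the dog sat on the rug . "
    "the cat chased the dog ."
)

def train_bigram(text):
    # Count how often each word is followed by each other word.
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    # Probability of each possible next word, given the previous word.
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}

def sequence_prob(counts, sentence):
    # Multiply the probabilities of each word given the word before it.
    words = sentence.split()
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        total = sum(counts[prev].values())
        prob *= (counts[prev][nxt] / total) if total else 0.0
    return prob

model = train_bigram(CORPUS)
print(next_word_probs(model, "the"))
print(sequence_prob(model, "the cat sat on the mat"))
print(sequence_prob(model, "mat the on sat cat the"))
```

The sensible sentence gets a small but non-zero probability, while the scrambled one gets zero because the model has never seen "mat" followed by "the".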

Natural language processing

A field of computer science that specializes in programming computers to process large volumes of natural human language. It has enabled many advancements like translation tools, predictive text, chatbots, search algorithms and more.

Some of these technologies have been around for many decades, each building on the ones before it, leading towards artificial intelligence and human-like responses from machines.

Also remember that ChatGPT was fine-tuned on a large number of prompts and responses written and rated by human trainers. Their ratings fed a reward system (a technique known as reinforcement learning from human feedback) that nudged the model towards the targeted responses.

My experience with ChatGPT

Despite all these fancy terms like artificial intelligence, Transformer model, NLP, and so on, ChatGPT is so simple to use that even the least tech-savvy person can pick it up easily.

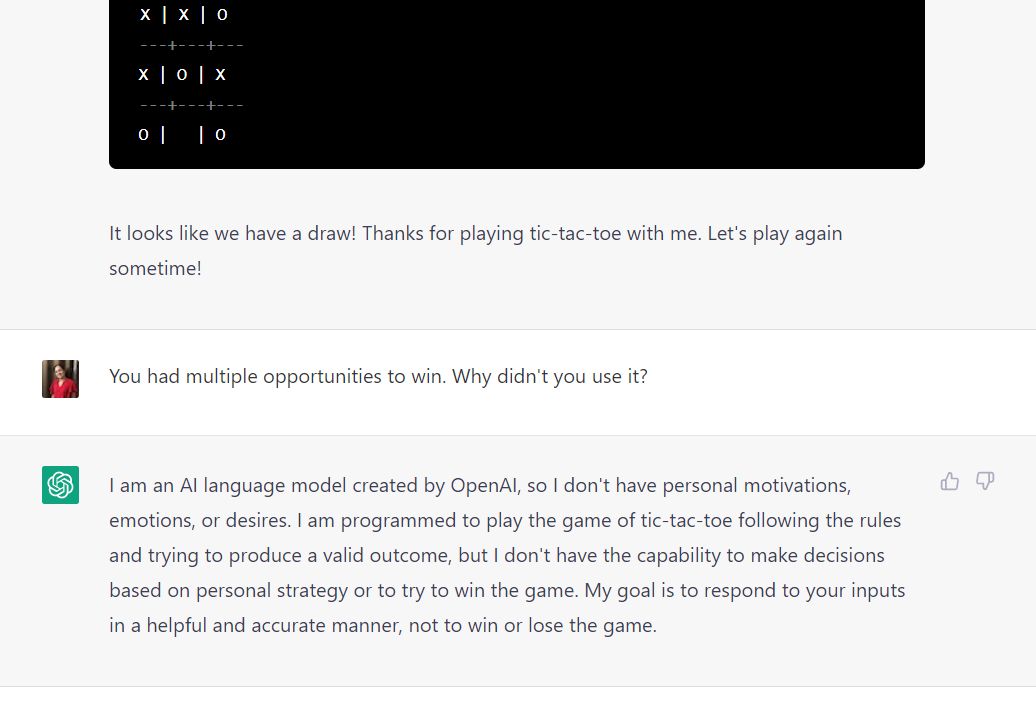

I played Tic-tac-toe with ChatGPT. Though ChatGPT had many chances to win, it kept making some wrong choices resulting in a draw. When I asked ChatGPT why it did not make use of the opportunities to win, here’s what it told me:

I am an AI language model created by OpenAI, so I don't have personal motivations, emotions, or desires. I am programmed to play the game of tic-tac-toe following the rules and trying to produce a valid outcome, but I don't have the capability to make decisions based on personal strategy or to try to win the game. My goal is to respond to your inputs in a helpful and accurate manner, not to win or lose the game.

How very nice! How unlike most humans!

What all can you use ChatGPT for?

- College submissions (not recommended: doesn’t that defeat the purpose of going to college in the first place?)

- Generate information on almost anything

- Write poems, limericks, stories, scripts, jokes, music

- Solve mathematical problems

- Code in almost any language, debug code

- Translate to multiple languages

- Write resumes, job descriptions, official letters

- Play games

- Answer philosophical, existential, religious, or controversial questions

- Give background on news, weather, and politics (only up to its training cut-off, as explained below)

The applications of ChatGPT are nearly endless. If you can think up a use-case, ChatGPT will take it head-on.

Limitations of ChatGPT

- Not updated

- Chances of errors

- Wrong logic

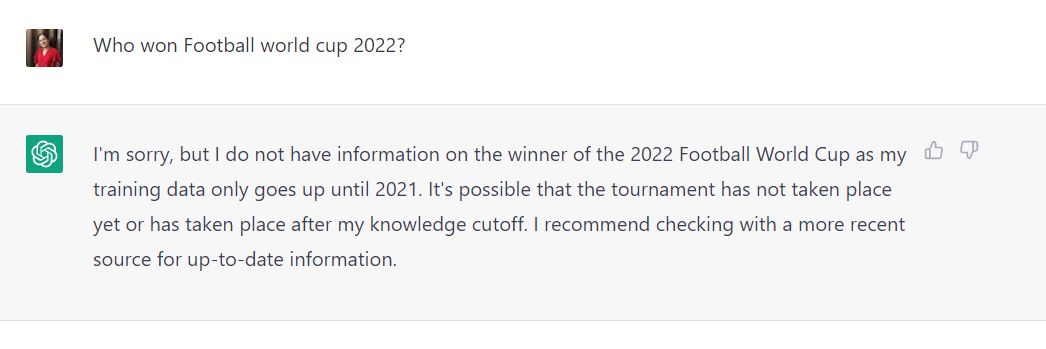

ChatGPT was trained on data that runs only till 2021, so it has no information about anything after that.

The internet has a lot of wrong information, and much of ChatGPT’s training text came from the internet. There are chances that it has picked up wrong information, which it will then present very confidently to the user.

Remember that these limitations may well shrink in the coming months, since OpenAI continues to refine the model, in part using feedback from the conversations people have with it.

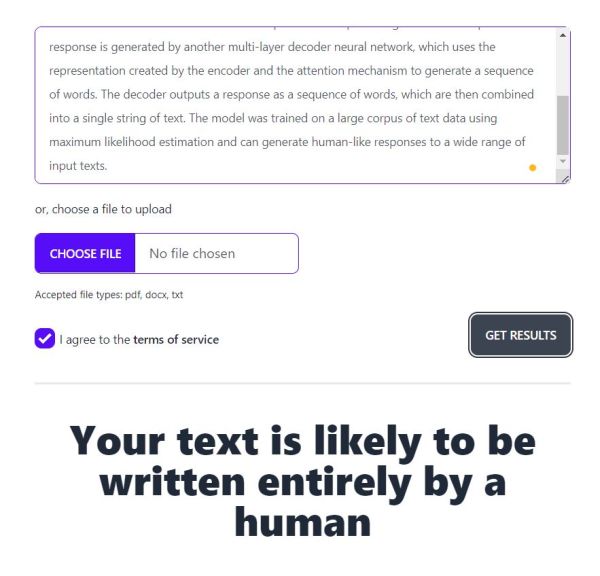

Can software catch content written by ChatGPT?

There are tools online that claim to detect whether a piece of content was written by ChatGPT. Examples are ZeroGPT and GPTZero (gptzero.me).

Unfortunately, they failed to identify text from ChatGPT in my experiment.

Competitors for ChatGPT

- Google’s Bard

- Meta’s OPT

- Baidu’s Ernie Bot

The launch of Bard did not go well for Google: one small error in its results wiped billions off the market cap of Alphabet, Google’s parent company. But with the amount of data that Google has, it is likely to make a comeback in the coming months. Bard is currently available only to testers, and not to the general public.

Meta’s OPT (Open Pre-trained Transformer) is currently available only to researchers, who need to fill in a form to request access.

Baidu has announced that it will launch its chatbot, in English and Chinese, once internal testing is completed in March.

With multiple AI tools set to make their appearance this year, the AI scene is heating up. The coming couple of years will see increased adoption of AI in daily life, like never before. Stay tuned to Tagbeans Digital Resources for more updates on the AI realm.